This is the third and final installment (for now!) in my brief series on Internet issues. This time we’re addressing throughput, because it’s another one that comes up occasionally.

So here is the scenario: you’ve had a rack of equipment in a datacenter in New York for the last few months, and everything is going well. But you had a brief outage back in 2013 when the hurricane hit, and you’d like to avoid having an issue like that again in the future, so you order some equipment, lease a rack in a datacenter in Los Angeles, and set to copying your data across.

Only, you hit a snag. You bought 1Gbps ports at each end, and you get pretty close to 1Gbps from speed test servers when you test systems on each end, but when you set about transferring your critical data files you realize you’re only seeing a few Mbps. What gives?!

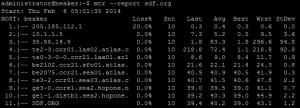

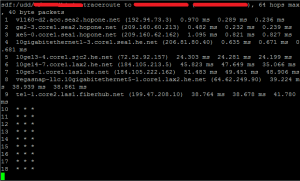

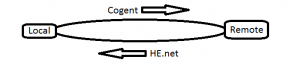

There are a number of things that can cause low speeds between two systems. It could be that the system itself is unable to transmit or receive the data fast enough, which may indicate a poor network setup (half-duplex, anyone?) or a bad crimp on a cable. It could be that there is congestion on one or more networks between the locations. Or, as in this case, it could simply be due to latency.

Latency, you ask? What difference does it make if the servers are right beside each other or half way around the world?! Chances are you are using a transfer protocol that uses TCP. HTTP does, FTP does, along with others. TCP has many pros compared with UDP, its alternative. The biggest is that it’s very hard to actually lose data in a TCP transfer, because it’s constantly checking itself. In the event it finds a packet hasn’t been received, it will resend it until either the connection times out or it receives an acknowledgement from the other side.

[notice]A TCP connection is made to a bar, and it says to the barman “I’d like a beer!”

The barman responds “You would like a beer?”

To which the TCP connection says “Yes, I’d like a beer”

The barman pours the beer, gives it to the TCP connection and says “OK, this is your beer.”

The TCP connection responds “This is my beer?”

The barman says “Yes, this is your beer”

and finally the TCP connection, having drunk the beer and enjoyed it, thanks the barman and disconnects.[/notice]

UDP, on the other hand, will send and forget. It doesn’t care whether the other side got the packet it sent, so any error checking has to be built into the service that is using UDP. It’s entirely possible that UDP packets will arrive out of order, so your protocol will need to take that into account too.

[notice]Knock knock.

Who’s there?

A UDP packet.

A UDP packet who?[/notice]

If you’re worried about losing data, TCP is the way to go. If you want to just send the stream and not worry about whether it gets there in time or in order, UDP is probably the better alternative. File transfers tend to use TCP, voice and video conversations tend to prefer UDP.
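To make the point about UDP leaving ordering and loss detection to your own protocol a bit more concrete, here is a minimal sketch. It involves no real networking: the 4-byte sequence-number header and the `wrap`, `unwrap` and `reassemble` helpers are made up for illustration, not part of any standard.

```python
import struct

# Hypothetical header: a 4-byte big-endian sequence number before each payload.
HEADER = struct.Struct("!I")


def wrap(seq, payload):
    """Prefix a payload with its sequence number before sending it over UDP."""
    return HEADER.pack(seq) + payload


def unwrap(datagram):
    """Split a received datagram back into (sequence number, payload)."""
    (seq,) = HEADER.unpack_from(datagram)
    return seq, datagram[HEADER.size:]


def reassemble(datagrams):
    """Put payloads back in order and report which sequence numbers never arrived."""
    received = dict(unwrap(d) for d in datagrams)
    if not received:
        return b"", []
    missing = [s for s in range(max(received) + 1) if s not in received]
    data = b"".join(received[s] for s in sorted(received))
    return data, missing


chunks = [b"I'd ", b"like ", b"a ", b"beer"]
sent = [wrap(i, chunk) for i, chunk in enumerate(chunks)]

# Simulate the network: datagram 1 is lost and the rest arrive out of order.
arrived = [sent[2], sent[0], sent[3]]
data, missing = reassemble(arrived)
print(data, missing)
```

What to do about the missing pieces (ask for them again, or just carry on) is exactly the decision TCP makes for you and UDP leaves up to you.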

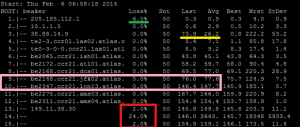

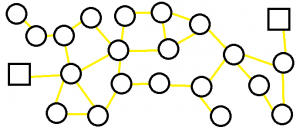

But that’s where TCP has its problem with latency: the constant checking. When a TCP stream sends a window of data, it waits for an acknowledgement before sending the next one. If your server in New York is sending data to another server in Los Angeles, remember our calculation from last week? The absolute best ideal world round-trip latency you can hope for is around 40ms, but because we know that fiber doesn’t run in a straight line, and routers and switches on the path are going to slow it down, that’s probably going to be closer to 45 or 50ms. That is, every time you send a window of traffic, your server waits roughly 45 to 50ms for the acknowledgement before it sends the next one.

The default window size in Debian is 208KB. The default in CentOS is 122KB. To calculate the max throughput, we need the window size in bits, and we divide that by the round-trip latency in seconds. For Debian, our max throughput from NY to LA is 212,992 × 8 (the window in bytes times 8, or 1,703,936 bits) divided by 0.045 = 37,865,244.44bps. That’s about 38Mbps as a max throughput, not including protocol overhead. For CentOS we get 22,209,422.22bps, or about 22Mbps.
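If you’d like to plug in your own numbers, the calculation above can be sketched in a few lines of Python. The function name is made up for illustration, the window sizes are the 208KB and 122KB defaults quoted above converted to bytes (× 1024), and the 45ms round trip is the assumed NY to LA figure. Protocol overhead is ignored, so treat the result as a ceiling rather than a prediction.

```python
def max_throughput_bps(window_bytes, rtt_seconds):
    """Upper bound for one TCP stream: one window of bits per round trip."""
    return window_bytes * 8 / rtt_seconds


RTT = 0.045  # assumed NY <-> LA round-trip time, in seconds

for name, window_kb in (("Debian", 208), ("CentOS", 122)):
    bps = max_throughput_bps(window_kb * 1024, RTT)
    print(f"{name}: {bps:,.2f} bps (about {bps / 1e6:.1f} Mbps)")
```

Try changing `RTT` to 0.001 (two servers in the same building) and the same windows suddenly look a lot more generous.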

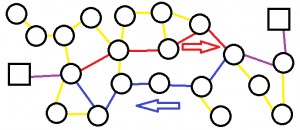

So for each stream, you get 22Mbps between CentOS servers when you’re paying for 1Gbps at each end. How can we fix this? There are three ways to resolve the issue:

1) Reduce the latency between locations. That isn’t going to happen, because they can’t be moved any closer together (at least, not without the two cities being really unhappy) and we’re limited by physics with regard to how quickly we can transmit data.

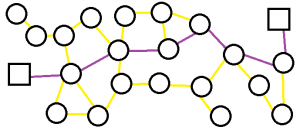

2) We can change the default window size in the operating system. That is, we can tell the OS to send bigger windows, so that instead of sending 208KB and waiting for an acknowledgement, we could send 1024KB and wait, or send 4096KB and wait. This has pros and cons. On the plus side, you spend less time per KB waiting for a response, meaning that if the window of data is successfully sent you don’t have to wait so long for the confirmation. The big negative side is that if for some reason any part of the window is lost or corrupted the entire window will need to be resent, and it has to sit in memory on the sending side until it has been acknowledged as received and complete.

3) We can tell the OS to send more windows of data before it needs to receive an acknowledgement. That is to say that instead of sending just one window and waiting for the ack, we can send 5 windows and wait for those to be acknowledged. We have the same con that the windows need to sit in memory before they are acked, but we are still sending more data before we get the ack, and if one of those windows is lost then it’s a smaller amount of data to be resent.
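Whichever of options 2 and 3 you lean towards, it helps to know how much data has to be "in flight" to keep the link full for one round trip. That is just the throughput calculation turned around. A rough sketch, with a made-up function name and assuming the 1Gbps ports and 45ms round trip from the scenario:

```python
def window_to_fill_link(link_bps, rtt_seconds):
    """Bytes that must be unacknowledged at once to keep the link busy for a round trip."""
    return link_bps * rtt_seconds / 8


needed = window_to_fill_link(1_000_000_000, 0.045)
print(f"{needed:,.0f} bytes (~{needed / 1024:,.0f} KB) in flight to fill the link")
```

With these assumed figures it comes out at a little over 5MB, so even the 4096KB example would leave some of the 1Gbps unused on a single stream.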

All in all, you need to decide what is best for your needs and what you are prepared to deal with. Option 1 isn’t really an option, but there are a number of settings you can tweak to make options 2 and 3 balance out for you, and increase that performance.
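Besides the system-wide settings, an application can also ask for bigger buffers on an individual socket. This is a different knob from the OS defaults discussed above, shown only as a sketch: the 4MB figure is illustrative, and the kernel is free to cap or adjust whatever you request, so the code reads the value back rather than assuming it stuck.

```python
import socket

REQUESTED = 4 * 1024 * 1024  # illustrative 4096KB request, not a recommendation

with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
    before = sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)

    # Request larger buffers before connecting, so the size is known at connection setup.
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, REQUESTED)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF, REQUESTED)

    # The OS may clamp or round the request, so check what was actually granted.
    after = sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
    print(f"receive buffer: {before} bytes before, {after} bytes after")
```

If the "after" number is much smaller than you asked for, the system-wide limits are what need raising first.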

On the other hand, you could also just perform your data transfer in a way that sends multiple streams of data over the connection and avoid the TCP tweaking issue altogether.
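As a back-of-the-envelope guide to how many streams that might take, you can divide the port speed by the per-stream ceiling. This sketch uses a made-up function name and the same assumptions as before (1Gbps ports, a 45ms round trip, default windows), and it ignores protocol overhead and congestion, so the real number will be somewhat higher.

```python
import math


def streams_needed(link_bps, window_bytes, rtt_seconds):
    """Parallel TCP streams needed for their combined ceilings to reach the link speed."""
    per_stream_bps = window_bytes * 8 / rtt_seconds
    return math.ceil(link_bps / per_stream_bps)


for name, window_kb in (("Debian", 208), ("CentOS", 122)):
    count = streams_needed(1_000_000_000, window_kb * 1024, 0.045)
    print(f"{name}: at least {count} streams")
```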